The Challenge: Rigidity in a Dynamic World

Traditional multirobot systems are rigid and struggle to adapt in real time to dynamic environments such as Search and Rescue (SAR). Predefined logic limits operator control, which creates a bottleneck as missions evolve.

Operators face:

- High cognitive load in complex missions.

- Difficulty adapting to unexpected changes.

- Cumbersome interfaces slowing critical decisions.

The Opportunity: Large Language Models (LLMs) understand natural language, which opens a new paradigm of flexible human-robot teams. We are merging LLM flexibility with the precision of classical algorithms, and doing so safely.

Our Vision

Imagine an operator simply stating: "A witness saw something near the northern cliffs. Reassign two drones to perform a low-altitude grid search there, now."

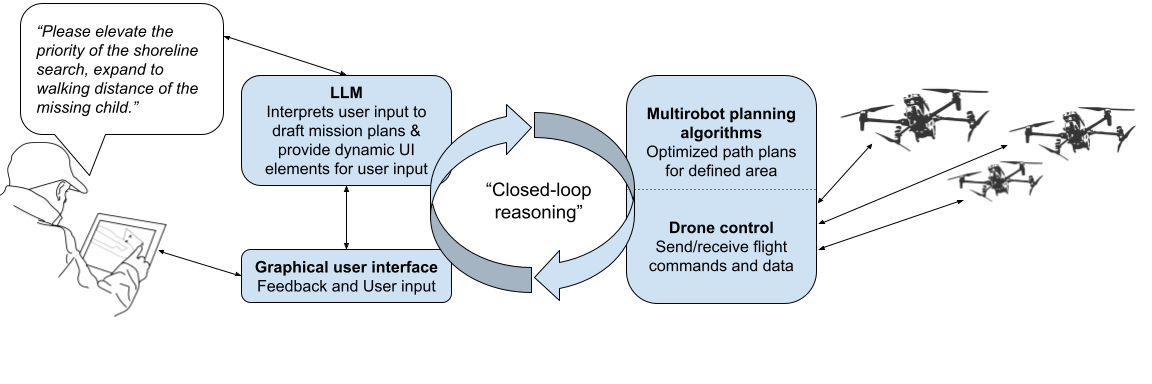

Our system translates this command into an optimized, collision-free flight plan. It presents proposed missions on a map with interactive controls for fine-tuning. This hybrid approach fuses human expertise with AI execution, enabling intuitive, high-level control while ensuring safety and efficiency.
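To make the translation step concrete, here is a minimal sketch of how a spoken command could be turned into a typed, checkable request before any planning happens. It assumes the LLM is asked to reply with JSON; the `SearchCommand` fields, the pattern names, and `parse_command` are hypothetical and are not taken from the project itself.

```python
import json
from dataclasses import dataclass

# Hypothetical set of search patterns; the real system may support others.
ALLOWED_PATTERNS = {"grid", "spiral", "perimeter"}


@dataclass(frozen=True)
class SearchCommand:
    region: str        # named map region, e.g. "northern_cliffs"
    num_drones: int    # how many drones to reassign
    pattern: str       # one of ALLOWED_PATTERNS
    altitude_m: float  # requested flight altitude in metres


def parse_command(raw: str) -> SearchCommand:
    """Parse JSON text (assumed to be LLM output) into a typed command.

    Raises ValueError if the text is not valid JSON, a field is missing,
    or a value is out of range.
    """
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    try:
        cmd = SearchCommand(
            region=str(data["region"]),
            num_drones=int(data["num_drones"]),
            pattern=str(data["pattern"]),
            altitude_m=float(data["altitude_m"]),
        )
    except (KeyError, TypeError) as exc:
        raise ValueError(f"missing or malformed field: {exc}") from exc
    if cmd.pattern not in ALLOWED_PATTERNS:
        raise ValueError(f"unknown search pattern: {cmd.pattern}")
    if cmd.num_drones < 1:
        raise ValueError("at least one drone is required")
    if cmd.altitude_m <= 0:
        raise ValueError("altitude must be positive")
    return cmd
```

For the example above, the LLM might be expected to produce something like `{"region": "northern_cliffs", "num_drones": 2, "pattern": "grid", "altitude_m": 30}`. Rejecting anything that does not parse keeps free-form model output away from the planner.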

PoC: A New Paradigm for SAR Missions

Our prototype demonstrates how LLMs can enhance human-multirobot interaction in SAR. By combining natural language processing with multirobot planning, it lets operators issue complex commands that are translated into coordinated drone actions such as navigation and area coverage.
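As an illustration of what an area coverage action can look like once a command has been understood, the sketch below generates a simple back-and-forth ("lawnmower") sweep over a rectangle and splits the rectangle into one strip per drone. This is a textbook pattern chosen for illustration, not the project's planner; the function names are hypothetical, and the sketch ignores obstacles, wind, battery limits, and collision avoidance.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float]


def lawnmower_path(x_min: float, x_max: float,
                   y_min: float, y_max: float,
                   spacing: float) -> List[Waypoint]:
    """Return waypoints sweeping the rectangle in alternating directions."""
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    path: List[Waypoint] = []
    y = y_min
    left_to_right = True
    while y <= y_max + 1e-9:  # small tolerance for float accumulation
        row = [(x_min, y), (x_max, y)]
        path.extend(row if left_to_right else row[::-1])
        left_to_right = not left_to_right
        y += spacing
    return path


def split_into_strips(x_min: float, x_max: float,
                      num_drones: int) -> List[Tuple[float, float]]:
    """Divide the x-range into equal-width strips, one per drone."""
    if num_drones < 1:
        raise ValueError("num_drones must be at least 1")
    width = (x_max - x_min) / num_drones
    return [(x_min + i * width, x_min + (i + 1) * width)
            for i in range(num_drones)]


# Usage: two drones share a 10 x 4 area with 2 m between sweep lines.
plans = [lawnmower_path(lo, hi, 0, 4, 2)
         for lo, hi in split_into_strips(0, 10, 2)]
```

Each entry in `plans` is the waypoint list for one drone. A real system would pass these through a planner that handles deconfliction and terrain.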

Operators keep high-level control while the system enforces safety and efficiency. The result is a more intuitive, flexible interface for dynamic environments.

Core Research Areas

Human-Multirobot Interaction

Designing intuitive interfaces to reduce cognitive load and enhance situational awareness in dynamic environments.

Explainable AI (XAI)

Making system behavior intelligible through natural-language explanations, so that operators can understand and trust it in safety-critical contexts.

Multirobot Planning

Advancing robust, adaptive algorithms for task allocation and path planning in real-time SAR missions.

Project Objectives

Seamless LLM-Multirobot Integration

Design closed-loop reasoning algorithms to translate natural language into efficient, safe, and optimized multi-UAV mission plans.
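One possible reading of "closed-loop" here is a propose, check, and retry cycle: the LLM proposes a plan, a deterministic checker reports any violations, and those violations are fed back into the next proposal. The sketch below shows that cycle under stated assumptions. The `propose` callable stands in for an LLM call, the plan is a plain dictionary, and the single fleet-size check is a placeholder for real safety and feasibility checks; none of these names come from the project.

```python
from typing import Callable, Dict, List, Optional

Plan = Dict[str, object]


def check_plan(plan: Plan, fleet_size: int) -> List[str]:
    """Return a list of problems with the plan; an empty list means it passed."""
    errors: List[str] = []
    n = plan.get("num_drones")
    if not isinstance(n, int) or n < 1:
        errors.append("num_drones must be a positive integer")
    elif n > fleet_size:
        errors.append(f"requested {n} drones but only {fleet_size} are available")
    return errors


def closed_loop_plan(request: str,
                     propose: Callable[[str, List[str]], Plan],
                     fleet_size: int,
                     max_rounds: int = 3) -> Optional[Plan]:
    """Ask for a plan, check it, and feed errors back until it passes.

    Returns None if no valid plan is found within max_rounds, so the
    caller can hand the decision back to the operator.
    """
    feedback: List[str] = []
    for _ in range(max_rounds):
        plan = propose(request, feedback)  # hypothetical LLM call
        feedback = check_plan(plan, fleet_size)
        if not feedback:
            return plan
    return None


# Usage with a stub in place of the LLM: it over-requests drones at first,
# then corrects itself once it receives feedback.
def stub_llm(request: str, feedback: List[str]) -> Plan:
    return {"num_drones": 2} if feedback else {"num_drones": 5}


result = closed_loop_plan("grid search near the northern cliffs", stub_llm, fleet_size=3)
```

Capping the number of rounds and returning `None` on failure keeps the loop bounded, which matters when the operator is waiting on a decision.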

Enhanced Real-time Situational Awareness

Develop robust error-handling and feedback mechanisms for reliable operation and dynamic mission adaptation in unpredictable environments.

Rigorous Experimental Validation

Validate the system in mock SAR missions using physical UAVs and advanced simulations, assessing performance, usability, and cognitive load reduction.

Meet the Research Team

Prof. Anders Lyhne Christensen

Project PI, SDU

Expert in multirobot systems, planning, and coordination of large-scale robotic systems.

Assoc. Prof. Timothy Merritt

Co-PI, AAU

Specialist in human-drone interaction, conversational AI, and human-AI teaming.

Alejandro Jarabo Peñas

PhD Student

Designs the system architecture and integrates LLMs with planning algorithms. Background: Telecom Engineering (UPM), Robotics (KTH).

Assoc. Prof. Nathan Lau

Collaborator, Virginia Tech

Expert in ecological interface design and human-in-the-loop systems for UAV-based SAR.

Prof. Satoshi Tadokoro

Advisor, Tohoku University

Renowned expert with over 25 years of experience in SAR and disaster robotics.

CEO Kenneth Geipel

Partner, Robotto

Industry leader in developing UAV solutions for SAR, firefighting, and conservation.